This tutorial shows how to upload media files to an S3 bucket and use a Lambda function to automatically start an Elastic Transcoder job that saves the output files to a second bucket. You can use this setup to produce audio and video files in different qualities and formats to serve to your users.

Create Amazon S3 Buckets

Create two S3 buckets: one for uploading your media files and another to store and distribute the transcoded files. The upload bucket can stay private, whereas the output bucket must be publicly readable if you want to serve files directly from it. Select the region closest to most of your visitors or consumers. You can create buckets from https://s3.console.aws.amazon.com/s3/buckets/.
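If you prefer scripting over the console, the bucket step can be sketched with boto3's S3 client as below. The bucket names and region are hypothetical placeholders. The helper takes an already-created client as a parameter, so it can be tried without AWS credentials; the one subtlety it encodes is that S3 expects the LocationConstraint to be omitted for us-east-1 and supplied for every other region.

```python
# Sketch: create the upload and output buckets with boto3.
# Bucket names and region are hypothetical placeholders.


def bucket_create_kwargs(name, region):
    """Build keyword arguments for S3 create_bucket.

    us-east-1 is the default location, so no LocationConstraint is sent
    for it; every other region must be named explicitly.
    """
    kwargs = {"Bucket": name}
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_media_buckets(s3_client, upload_bucket, output_bucket, region):
    """Create both buckets using an injected boto3 S3 client."""
    for name in (upload_bucket, output_bucket):
        s3_client.create_bucket(**bucket_create_kwargs(name, region))
    return [upload_bucket, output_bucket]
```

For example, pass boto3.client("s3", region_name="eu-west-1") as s3_client together with "eu-west-1" as the region.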

Create a Pipeline in AWS Elastic Transcoder

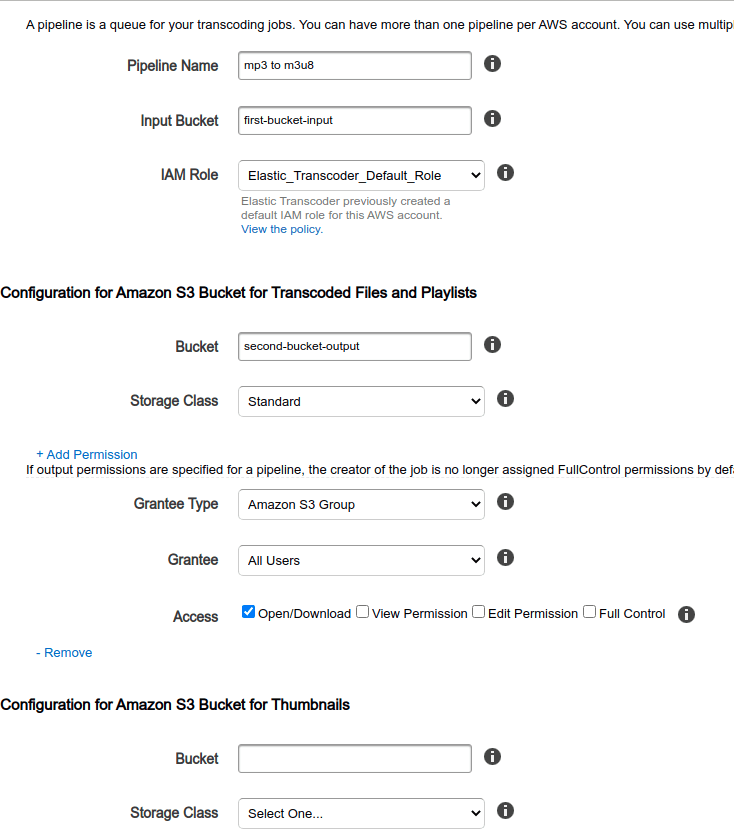

You can access the Elastic Transcoder console from https://console.aws.amazon.com/elastictranscoder/. Using the same region for the transcoder and the S3 buckets can save you cross-region data transfer costs. Create a new pipeline and select the appropriate buckets for input and output. To make the transcoded files publicly accessible, grant Open/Download access to All Users, as shown in the screenshot above.

Once the pipeline is created, open its details and note down the Pipeline ID; the Lambda function will need it.
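The same pipeline can be sketched with boto3's elastictranscoder client. This is a minimal sketch under a few assumptions: the pipeline name, bucket names and role ARN are hypothetical placeholders, and the role is the IAM role Elastic Transcoder itself assumes (not the Lambda role created later). As we read the Elastic Transcoder API reference, granting permissions means using ContentConfig together with ThumbnailConfig instead of OutputBucket; the console's Open/Download access is expressed here as a "Read" grant to the AllUsers group.

```python
# Sketch: create an Elastic Transcoder pipeline whose output is publicly readable.
# Pipeline name, bucket names and role ARN are hypothetical placeholders.

# Equivalent of the console's "Open/Download" access for All Users.
PUBLIC_READ = [{"GranteeType": "Group", "Grantee": "AllUsers", "Access": ["Read"]}]


def pipeline_kwargs(name, input_bucket, output_bucket, role_arn):
    """Build arguments for create_pipeline.

    Permissions are attached via ContentConfig/ThumbnailConfig, so
    OutputBucket is deliberately left out.
    """
    output_config = {"Bucket": output_bucket, "Permissions": PUBLIC_READ}
    return {
        "Name": name,
        "InputBucket": input_bucket,
        "Role": role_arn,
        "ContentConfig": dict(output_config),
        "ThumbnailConfig": dict(output_config),
    }


def create_pipeline(transcoder_client, name, input_bucket, output_bucket, role_arn):
    """Create the pipeline and return its ID (the value to note down)."""
    response = transcoder_client.create_pipeline(
        **pipeline_kwargs(name, input_bucket, output_bucket, role_arn)
    )
    return response["Pipeline"]["Id"]
```

Passing boto3.client("elastictranscoder") as transcoder_client returns the same Pipeline ID the console shows.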

In Elastic Transcoder, each transcoding task is a Job. The pipeline stores the input bucket, output bucket, and permissions, while the job supplies the remaining details for converting a file, such as the input key and the output presets. Every job runs in a pipeline.
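Because a job runs asynchronously after it is created, it can help to check its progress. The sketch below polls read_job until the job reaches a final status; the statuses Submitted, Progressing, Complete, Canceled and Error come from the Elastic Transcoder API, while the polling interval and limit are arbitrary choices for illustration. The sleep function is injectable so the loop can be exercised without waiting.

```python
# Sketch: poll an Elastic Transcoder job until it finishes.
import time

# Statuses after which a job no longer changes.
FINAL_STATES = {"Complete", "Canceled", "Error"}


def wait_for_job(transcoder_client, job_id, poll_seconds=5, max_polls=120, sleep=time.sleep):
    """Return the final status of a job.

    transcoder_client is a boto3 'elastictranscoder' client (or any object
    with a compatible read_job method). Raises TimeoutError if the job is
    still running after max_polls checks.
    """
    for _ in range(max_polls):
        status = transcoder_client.read_job(Id=job_id)["Job"]["Status"]
        if status in FINAL_STATES:
            return status
        sleep(poll_seconds)
    raise TimeoutError(f"job {job_id} not finished after {max_polls} polls")
```

boto3 also ships a job_complete waiter (client.get_waiter("job_complete")) for the same purpose; the hand-written loop simply makes the status check visible.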

Creating jobs manually is time-consuming and error-prone, so we will automate it with a Lambda function and S3 event notifications. As soon as a file finishes uploading to the first bucket, S3 sends a notification that triggers the Lambda function. The function then creates an Elastic Transcoder job that transcodes the uploaded file and saves the result to the second bucket, ready for consumption.
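To make the hand-off concrete, here is a sketch of the part of an S3 notification the Lambda function reads. The sample event is trimmed to the fields used and its bucket and key are hypothetical. One detail worth knowing: S3 URL-encodes object keys in notifications (a space arrives as "+"), so the key is decoded with unquote_plus before it is passed to Elastic Transcoder.

```python
# Sketch: pull bucket/key pairs out of an S3 event notification.
from urllib.parse import unquote_plus


def uploaded_objects(event):
    """Yield (bucket, key) for each record, with the key URL-decoded."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key


# A trimmed-down example event; real events carry many more fields.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "my-media-uploads"},
                "object": {"key": "podcasts/episode+1.mp3"},
            },
        }
    ]
}

print(list(uploaded_objects(sample_event)))
# [('my-media-uploads', 'podcasts/episode 1.mp3')]
```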

Creating a role for Lambda execution

- Visit https://console.aws.amazon.com/iam/home#/roles and create a new role.

- Choose Lambda as the common use case.

- Under permissions, attach the AWSLambdaBasicExecutionRole and AmazonElasticTranscoder_FullAccess policies.

- Follow the remaining steps to finish creating the role.
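The console steps above roughly correspond to two IAM API calls: create_role with a trust policy that lets Lambda assume the role (which is what choosing Lambda as the use case sets up), then attach_role_policy once per managed policy. This is a sketch with a hypothetical role name; please verify both policy ARNs in the IAM console before relying on them.

```python
# Sketch: create the Lambda execution role with boto3 instead of the console.
# The role name is a hypothetical placeholder; verify the policy ARNs in IAM.
import json

# Trust policy: allows the Lambda service to assume this role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

MANAGED_POLICY_ARNS = [
    "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole",
    "arn:aws:iam::aws:policy/AmazonElasticTranscoder_FullAccess",
]


def create_lambda_role(iam_client, role_name):
    """Create a role Lambda can assume, attach both policies, return its ARN."""
    response = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    for arn in MANAGED_POLICY_ARNS:
        iam_client.attach_role_policy(RoleName=role_name, PolicyArn=arn)
    return response["Role"]["Arn"]
```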

Creating the Lambda function

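In the Lambda console, create a function with a Python runtime, choose the role created above as its execution role, and replace the generated code with the following:

```python
import os
from urllib.parse import unquote_plus

import boto3

# Replace with the Pipeline ID noted down earlier.
PIPELINE_ID = '[pipeline-id]'
HLS_AUDIO_160K_PRESET_ID = '1351620000001-200060'  # HLS Audio - 160k
SEGMENT_DURATION = '15'  # seconds per HLS segment; the API expects a string

# Create the client once so warm invocations can reuse it.
transcoder = boto3.client('elastictranscoder')


def lambda_handler(event, context):
    out = []
    for record in event['Records']:
        # The input bucket is configured on the pipeline, so only the key is needed.
        # S3 URL-encodes keys in notifications (e.g. spaces arrive as '+').
        key = unquote_plus(record['s3']['object']['key'])

        # Strip the extension; Elastic Transcoder automatically adds one.
        key_sans_extension = os.path.splitext(key)[0]

        outputs = [
            {
                'Key': key_sans_extension,
                'PresetId': HLS_AUDIO_160K_PRESET_ID,
                'SegmentDuration': SEGMENT_DURATION,
            }
        ]

        response = transcoder.create_job(
            PipelineId=PIPELINE_ID,
            Input={'Key': key},
            Outputs=outputs,
        )
        print(response)
        out.append(response)

    return {
        'statusCode': 200,
        'body': out,
    }
```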

The code above produces an HLS (.m3u8) audio stream from each uploaded file. You can use other presets as well. To generate multiple output files from one upload, add more dictionaries to the outputs list, each with its own key and preset ID.
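Here is one way to build several outputs for a single job, plus an HLSv3 master playlist that references them. This is a sketch: the key suffixes are hypothetical, and apart from 1351620000001-200060 (HLS Audio - 160k, used above) any preset ID you pass in must be looked up in the Elastic Transcoder console. Each output gets a distinct key so the outputs do not overwrite each other.

```python
# Sketch: build several outputs and an HLS master playlist for one create_job call.
import os


def build_outputs(key, variants, segment_duration="15"):
    """Return (outputs, playlists) for create_job.

    variants is a list of (suffix, preset_id) pairs; suffixes are
    hypothetical labels used to keep output keys distinct.
    """
    base = os.path.splitext(key)[0]
    outputs = [
        {
            "Key": f"{base}-{suffix}",
            "PresetId": preset_id,
            "SegmentDuration": segment_duration,
        }
        for suffix, preset_id in variants
    ]
    playlists = [
        {
            "Name": base,  # Elastic Transcoder appends the .m3u8 extension
            "Format": "HLSv3",
            "OutputKeys": [o["Key"] for o in outputs],
        }
    ]
    return outputs, playlists
```

Inside the handler, the result would be passed as transcoder.create_job(PipelineId=PIPELINE_ID, Input={'Key': key}, Outputs=outputs, Playlists=playlists).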

Notifying Lambda for new uploads

- Visit https://s3.console.aws.amazon.com/s3/buckets/ and open the first bucket, the one where media is to be uploaded.

- Under Properties, scroll down to Event Notifications.

- Click Create event notification.

- Give the notification a name, then select the PUT and Multipart upload completed event types. The Multipart upload completed event fires when a multipart upload finishes, which is how large files are typically uploaded.

- If the Lambda function should run only for particular folders (prefixes) or file extensions, specify them in the Prefix and/or Suffix fields.

- Select Lambda Function as the notification destination, pick the Lambda function created earlier, and save the form.
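The same notification can be sketched with the S3 put_bucket_notification_configuration API. The bucket name, function ARN, prefix and suffix are hypothetical placeholders. Two caveats, as we understand the API: this call replaces the bucket's entire notification configuration, and unlike the console it does not grant S3 permission to invoke the function, so that grant would need to be added first (for example with the Lambda add_permission API and principal s3.amazonaws.com).

```python
# Sketch: configure the upload notification with boto3.
# Bucket name, function ARN, prefix and suffix are hypothetical placeholders.


def notification_config(function_arn, prefix="", suffix=""):
    """Build a NotificationConfiguration that invokes a Lambda on new uploads."""
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})
    lambda_config = {
        "Id": "transcode-on-upload",
        "LambdaFunctionArn": function_arn,
        # PUT uploads plus completed multipart uploads (large files).
        "Events": [
            "s3:ObjectCreated:Put",
            "s3:ObjectCreated:CompleteMultipartUpload",
        ],
    }
    if rules:
        lambda_config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"LambdaFunctionConfigurations": [lambda_config]}


def enable_upload_trigger(s3_client, bucket, function_arn, prefix="", suffix=""):
    """Apply the configuration; this REPLACES any existing notifications."""
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=notification_config(function_arn, prefix, suffix),
    )
```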